Reusable Delivery Pipelines with Dagger

it works on my computer

For lots of reasons, your CI environment is likely different to the environment you write code in locally. You’ve probably got different environment variables and libraries, probably a different OS, and more than likely a different CPU architecture (ARM64?). These differences compound into a fundamentally different execution environment. So how can you be sure that what you test locally is what goes through CI?

I really enjoy the different flavours of developing in a container: Dev Containers with VS Code, DevPod, Gitpod, GitHub Codespaces, and so on. You control the development environment, you onboard people quicker, and it builds here and it builds there! Another take on this concept is controlling the execution environment for tests, builds, and anything else that might happen in your CI workflow.

Dagger.io lets you write delivery pipelines in Go, TypeScript or Python that will run anywhere there is a Docker engine, and Docker is table stakes for many developers now. Under the hood Dagger uses BuildKit, which gives it aggressive caching and reproducibility.

I’ve got a couple of personal projects that are small and boring and don’t have interesting pipelines, so I tried writing some Dagger flows for them. You can take a look if you like: hhtpcd/dockerfiles.

Install Dagger by following the instructions in the docs, or download one of the binary releases from GitHub.

Initialise your project; it’s not a problem if it’s an existing directory. This just sets up the language prerequisites. I’ve chosen TypeScript.

```shell
dagger init --sdk=typescript
```

I’ve now got an `sdk/` directory that contains the Dagger SDK and, importantly, `./dagger/src/index.ts`, which is where I’m going to write my pipeline functions.

If you’ve ever touched a Dockerfile, a lot of the functions and calls you chain together in Dagger will feel very familiar, and the concept of multi-stage builds shows through clearly.

I wrote a function that builds the docker/cli project, because I wanted to use the latest version of it.

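```typescript
import { dag, Container, object, func } from "@dagger.io/dagger";

@object()
class dockerfiles {
  // Build the docker CLI binary for a target OS/architecture
  // from a tagged release of the docker/cli repository.
  @func()
  buildCli(goos: string, goarch: string, version: string): Container {
    // Check out the requested tag, keeping the .git directory
    // so the build scripts can derive version info from git.
    const repoSrc = dag
      .git("https://github.com/docker/cli", { keepGitDir: true })
      .tag(version)
      .tree();

    return dag
      .container()
      .from("golang:1.21-bullseye")
      // Mount the source where the docker/cli build expects it
      .withMountedDirectory("/go/src/github.com/docker/cli", repoSrc)
      .withWorkdir("/go/src/github.com/docker/cli")
      // Skip docker/cli's warning about building outside its dev container
      .withEnvVariable("DISABLE_WARN_OUTSIDE_CONTAINER", "1")
      // Static binary, cross-compiled for the requested platform
      .withEnvVariable("CGO_ENABLED", "0")
      .withEnvVariable("GOOS", goos)
      .withEnvVariable("GOARCH", goarch)
      .withExec(["make", "binary"]);
  }
}
```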
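The `goos` and `goarch` parameters pick the target platform, which matters given how often your laptop and CI disagree on OS and CPU architecture. If you want a binary for the machine you’re sitting at, a small helper can translate Node.js platform names into Go’s. This is a minimal sketch: `goTargetFor` is a hypothetical name, and the tables only cover a few common values, throwing on anything else rather than guessing.

```typescript
// Hypothetical helper: translate Node.js platform/arch names into Go's GOOS/GOARCH.
type GoTarget = { goos: string; goarch: string };

// Node.js `process.platform` value -> Go GOOS (common cases only).
const GOOS_BY_PLATFORM = new Map<string, string>([
  ["darwin", "darwin"],
  ["linux", "linux"],
  ["win32", "windows"],
  ["freebsd", "freebsd"],
]);

// Node.js `process.arch` value -> Go GOARCH (common cases only).
const GOARCH_BY_ARCH = new Map<string, string>([
  ["x64", "amd64"],
  ["arm64", "arm64"],
  ["ia32", "386"],
]);

function goTargetFor(platform: string, arch: string): GoTarget {
  const goos = GOOS_BY_PLATFORM.get(platform);
  const goarch = GOARCH_BY_ARCH.get(arch);
  // Refuse to guess for anything outside the tables above.
  if (goos === undefined || goarch === undefined) {
    throw new Error(`unsupported platform/arch: ${platform}/${arch}`);
  }
  return { goos, goarch };
}
```

In a Node.js script you’d call `goTargetFor(process.platform, process.arch)`; on an Apple Silicon Mac that gives `darwin` and `arm64`, which you’d pass as `--goos darwin --goarch arm64`.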

We can call this function using the Dagger CLI. The TypeScript method `buildCli` is exposed as `build-cli`, and its parameters become flags.

```shell
dagger call build-cli --goos linux --goarch amd64 --version v26.1.3
```

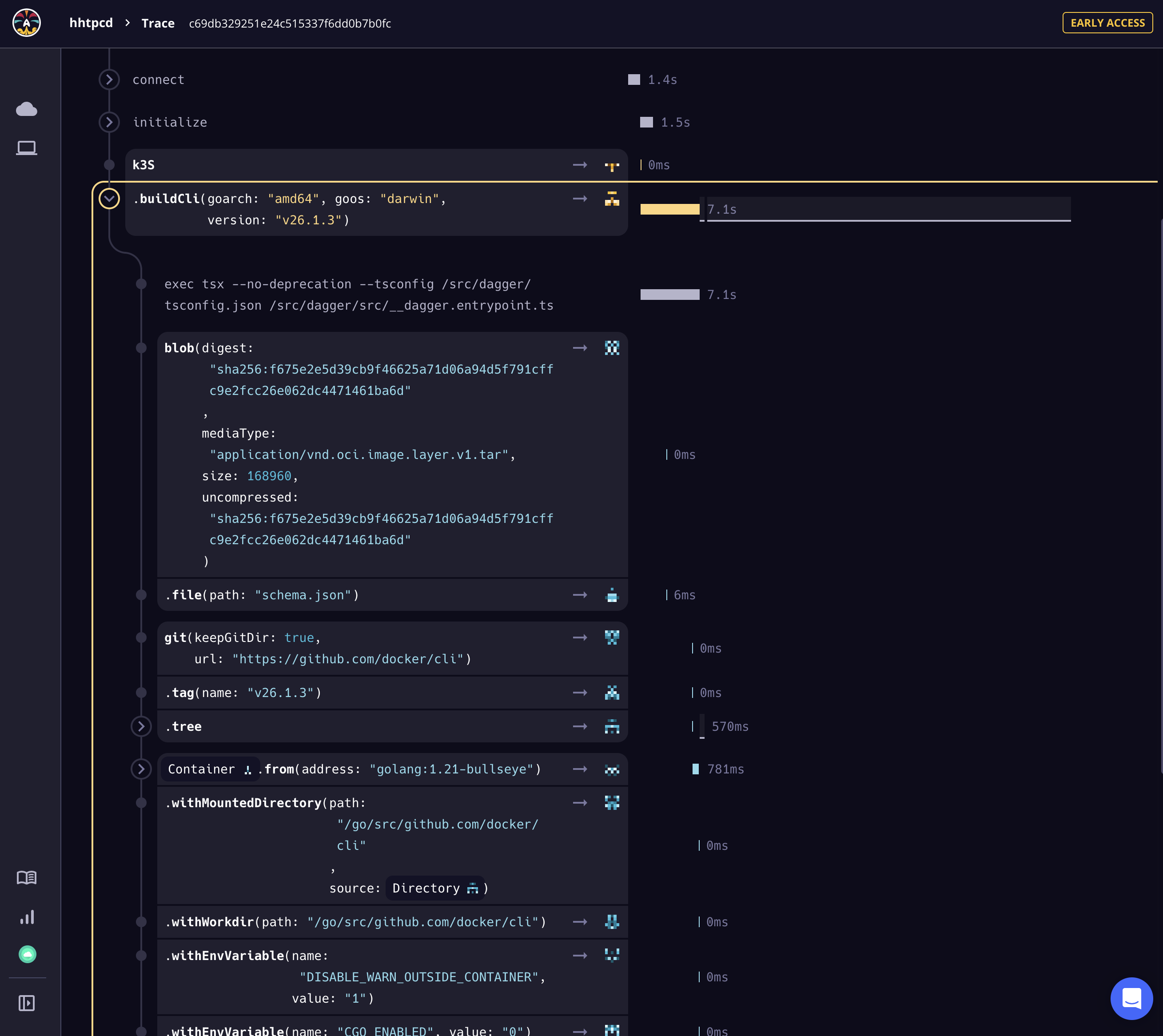

This executes in a beautiful TUI that is a live stream of a trace. You can zoom in, and out, and pan around. I cannot do justice to how much fun it is to watch.

We can chain built-in Dagger functions to create more complex pipelines and flows. Let’s pull the binary out of the container I just built and into the `./build` directory. Here we use `directory` to select the source path to export, and `export` to set the destination.

```shell
dagger call build-cli \
  --goos linux \
  --goarch amd64 \
  --version v26.1.3 \
  directory --path=/go/src/github.com/docker/cli/build \
  export --path=./build
```
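Because the whole chain is just command-line arguments, building for several platforms is a loop over targets. A minimal sketch, assuming you drive `dagger` from a Node.js script: the hypothetical `exportArgs` helper produces the argument list for the same `build-cli ... directory ... export` chain as the command above, and exporting to a per-platform `./build/<goos>-<goarch>` directory is an assumed layout so the results don’t land on top of each other.

```typescript
// Hypothetical helpers for driving the `dagger call` chain from a Node.js script.
type Target = { goos: string; goarch: string };

// Build the arguments (everything after "dagger") for one target platform.
function exportArgs(version: string, target: Target): string[] {
  return [
    "call",
    "build-cli",
    "--goos", target.goos,
    "--goarch", target.goarch,
    "--version", version,
    // Pick the build output directory inside the container...
    "directory", "--path=/go/src/github.com/docker/cli/build",
    // ...and export it to a per-platform directory on the host (assumed layout).
    "export", `--path=./build/${target.goos}-${target.goarch}`,
  ];
}

const targets: Target[] = [
  { goos: "linux", goarch: "amd64" },
  { goos: "linux", goarch: "arm64" },
  { goos: "darwin", goarch: "arm64" },
];

// One argument list per platform, all for the same docker/cli release.
const invocations: string[][] = targets.map((t) => exportArgs("v26.1.3", t));
```

Each array could then be handed to `spawnSync("dagger", args, { stdio: "inherit" })` from `node:child_process`. Passing an argument array rather than a shell string sidesteps quoting issues.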

Honestly, even for a short build like this, it takes quite a while to finish. There seems to be a lot of preparation and setup. It is cached, but it’s still not that fast. I’m very willing to consider that I’m doing it wrong, or that Docker on Mac is just super slow. Brief tests on a Linux server seem faster, but I expect a lot more development happens on Macs than on Linux.

Even better, you can export your traces to Dagger Cloud, and it’s even more glorious than the TUI. This is one of my favourite UIs for exploring single traces; I’d take it over AWS X-Ray every, single, day of the week.

This is about as far as I’ve got with Dagger. At $JOB we run a strict supply chain, and it’s not yet clear to me whether we could specify where Dagger pulls the SDK and other dependencies from. If we can, there is likely more of an enterprise use case here, but that might not be a focus for Dagger right now.

The typing, IntelliSense and rich editor experience you get from programming in a language like TypeScript are marvellous compared with Bash scripts, Makefiles and Dockerfiles. The appeal is immediately obvious and the benefits are clear. Dagger’s helper functions for caching, environment variables, sourcing and outputting are equally great. Tools like Dagger considerably lower the barrier to controlling the environment your CI runs in. I can’t see why you wouldn’t take on tools like this now.